Toxicology research hinges on robust data analysis. ToxGenie streamlines this process with tools that simplify toxicology data analysis while supporting compliance with OECD and EPA guidelines. In this post, we explore the workflows for Point Estimation and Hypothesis Testing and show how ToxGenie helps researchers reach accurate results efficiently.

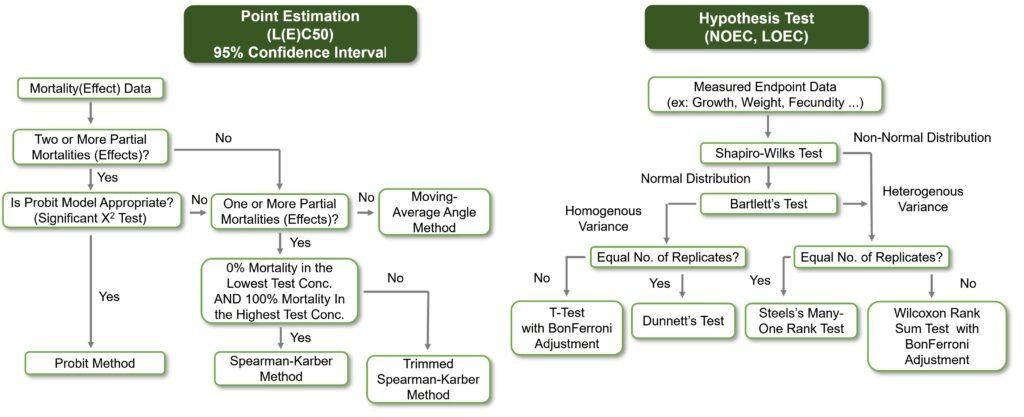

Point Estimation is the most commonly used method for analyzing quantal data, such as survival or mortality outcomes, in toxicology studies. While Probit and Logit analyses are standard approaches, many commercial statistical software packages do not support alternative methods like the Moving-Average Angle Method, Spearman-Karber Method, and Trimmed Spearman-Karber Method.

In practice, acute toxicity tests typically involve 5–6 experimental concentrations (including a control) with few test organisms per concentration, and such designs often fail to produce reliable results with Probit or Logit analysis. To address this, ToxGenie supports a wide range of Point Estimation methods, including the Moving-Average Angle Method and Trimmed Spearman-Karber Method, which can analyze data even when fewer than two concentrations produce a partial effect (a response strictly between 0% and 100%). Additionally, ToxGenie estimates IC25 and IC50 (the concentrations causing 25% and 50% inhibition, calculated from quantitative rather than quantal data) with 95% confidence limits, which is critical for cytotoxicity studies. Together, these features help researchers obtain results quickly even from small datasets. Explore ToxGenie’s analysis tools to learn more.
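
To make the idea concrete, here is a minimal Python sketch of the basic (untrimmed) Spearman-Karber estimate. It is not ToxGenie's implementation: the function name and the data are hypothetical, and the sketch assumes the observed proportions already rise monotonically from 0% to 100%. The trimmed variant relaxes that last requirement.

```python
import math


def spearman_karber_lc50(concentrations, n_exposed, n_dead):
    """Untrimmed Spearman-Karber estimate of the LC50 (illustrative only).

    Inputs are parallel lists for the treatment groups, ordered by
    increasing concentration. The control (concentration 0) is left out
    because its logarithm is undefined.
    """
    if not (len(concentrations) == len(n_exposed) == len(n_dead)):
        raise ValueError("all inputs must have the same length")

    # Observed proportion affected at each concentration.
    props = [dead / exposed for dead, exposed in zip(n_dead, n_exposed)]

    # Simplifying assumptions of this sketch: monotone response, 0% to 100%.
    if any(later < earlier for earlier, later in zip(props, props[1:])):
        raise ValueError("proportions must not decrease with concentration")
    if props[0] != 0.0 or props[-1] != 1.0:
        raise ValueError("untrimmed method needs responses spanning 0% to 100%")

    log_conc = [math.log10(c) for c in concentrations]

    # Weight the midpoint of each adjacent pair of log-concentrations by the
    # increase in response across that interval.
    mean_log = sum(
        (p_hi - p_lo) * (x_lo + x_hi) / 2
        for p_lo, p_hi, x_lo, x_hi in zip(props, props[1:], log_conc, log_conc[1:])
    )
    return 10 ** mean_log


# Hypothetical data with a single partial-effect concentration (50% at 4 mg/L).
lc50 = spearman_karber_lc50(
    concentrations=[1, 2, 4, 8, 16],
    n_exposed=[10, 10, 10, 10, 10],
    n_dead=[0, 0, 5, 10, 10],
)
print(f"Estimated LC50: {lc50:.2f} mg/L")
```

This dataset has only one partial response, which is the kind of case where a Probit or Logit fit tends to be unreliable. Because the response is symmetric around 4 mg/L on the log scale, the estimate lands at about 4 mg/L.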

Hypothesis Testing: Tackling Challenges in Toxicology Data Analysis

A major point of contention between toxicologists and statisticians is the number of replicates per experimental concentration. Ideally, researchers aim to maximize replicates, but practical constraints in toxicity testing often make this challenging. To ensure reliable results, ToxGenie requires a minimum of three replicates per concentration, flagging datasets with fewer replicates as errors during analysis. This safeguard enhances the credibility of your findings.
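
A replicate check of this kind can be sketched in a few lines of Python. The record layout and function name below are assumptions made for illustration, not ToxGenie's actual data model. Only the threshold of three comes from the description above.

```python
from collections import Counter

MIN_REPLICATES = 3  # minimum per concentration, as stated above


def find_under_replicated(records, min_replicates=MIN_REPLICATES):
    """Return {concentration: replicate count} for groups below the minimum.

    records: iterable of (concentration, response) pairs, one per replicate.
    """
    counts = Counter(concentration for concentration, _ in records)
    return {conc: n for conc, n in counts.items() if n < min_replicates}


# Hypothetical dataset: the 10 mg/L group has only two replicates.
records = [
    (0, 0.95), (0, 0.90), (0, 1.00),
    (10, 0.80), (10, 0.70),
]
problems = find_under_replicated(records)
if problems:
    print(f"Too few replicates at concentrations: {problems}")
```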

Another key challenge is data transformation. Datasets with few replicates often fail to meet the normality and homogeneity-of-variance assumptions, even after transformations tailored to toxicology data. ToxGenie addresses this by automatically applying an appropriate transformation (e.g., log or arcsine) based on the data’s characteristics, so no manual preprocessing is required. Discover how ToxGenie’s tools simplify your workflow.
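
As a rough illustration of what choosing a transformation from the data's characteristics can look like, the Python sketch below applies an arcsine square-root transformation to proportions and a log10 transformation to strictly positive measurements. The selection rule and function names are assumptions for this example. ToxGenie's actual criteria are not described here and may differ.

```python
import math


def arcsine_sqrt(p):
    """Arcsine square-root transformation for a proportion in [0, 1]."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("proportion must lie between 0 and 1")
    return math.asin(math.sqrt(p))


def choose_transform(values):
    """Pick a transformation with a simple, hypothetical rule.

    - all values in [0, 1]: treat as proportions, use arcsine square-root
    - all values positive:  use log10
    - otherwise:            leave the data untransformed
    """
    if all(0.0 <= v <= 1.0 for v in values):
        return "arcsine", [arcsine_sqrt(v) for v in values]
    if all(v > 0 for v in values):
        return "log10", [math.log10(v) for v in values]
    return "none", list(values)


# Hypothetical survival proportions and growth measurements.
survival_name, survival_t = choose_transform([0.0, 0.5, 1.0])
growth_name, growth_t = choose_transform([10, 100])
print(survival_name, [round(v, 4) for v in survival_t])
print(growth_name, growth_t)
```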

Hypothesis Testing Workflow with ToxGenie

- Data Preparation: Input data with at least three replicates per experimental concentration.

- Automated Transformation: ToxGenie applies a suitable transformation (e.g., log, arcsine) to help the data meet normality and equal-variance assumptions.

- Normality Testing: Perform tests like Shapiro-Wilk to verify data normality, ensuring valid statistical assumptions.

- Homogeneity of Variance Testing: Conduct tests like Levene’s to confirm equal variances across groups.

- Statistical Testing: Run the appropriate test based on the normality and variance results: parametric tests (e.g., t-tests, ANOVA) when both assumptions hold, or non-parametric alternatives (e.g., Mann-Whitney U) when they do not.

- Result Interpretation: Visualize results and generate regulatory-compliant reports.
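
The decision logic in the steps above can be sketched with SciPy. This is an illustration under stated assumptions, not ToxGenie's code: the function name, the 0.05 threshold, the choice to run Shapiro-Wilk on pooled residuals, and the use of Kruskal-Wallis for more than two groups are choices made for this example.

```python
from scipy import stats


def compare_groups(groups, alpha=0.05):
    """Check assumptions, then pick a parametric or non-parametric test.

    groups: one list of (already transformed) responses per concentration,
    control included, each with at least three replicates.
    """
    if len(groups) < 2 or any(len(g) < 3 for g in groups):
        raise ValueError("need at least two groups with three or more replicates")

    # Shapiro-Wilk on the pooled residuals (each value minus its group mean).
    residuals = [x - sum(g) / len(g) for g in groups for x in g]
    normality_p = stats.shapiro(residuals).pvalue

    # Levene's test for equal variances across groups.
    variance_p = stats.levene(*groups).pvalue

    parametric = bool(normality_p > alpha and variance_p > alpha)
    if len(groups) == 2:
        name = "t-test" if parametric else "Mann-Whitney U"
        result = stats.ttest_ind(*groups) if parametric else stats.mannwhitneyu(*groups)
    else:
        name = "ANOVA" if parametric else "Kruskal-Wallis"
        result = stats.f_oneway(*groups) if parametric else stats.kruskal(*groups)

    return {
        "normality_p": float(normality_p),
        "variance_p": float(variance_p),
        "test": name,
        "p_value": float(result.pvalue),
    }


# Hypothetical survival proportions: control plus two concentrations.
groups = [
    [0.95, 0.92, 1.00],
    [0.85, 0.80, 0.88],
    [0.40, 0.35, 0.45],
]
outcome = compare_groups(groups)
print(outcome["test"], f"p = {outcome['p_value']:.4f}")
```

A significant overall result only says that at least one group differs. Identifying which concentrations differ from the control needs a follow-up multiple-comparison procedure, which this sketch leaves out.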

Start Analyzing with ToxGenie

ToxGenie simplifies the statistical analysis of complex toxicity data, making it ideal for both beginners and seasoned researchers. Experience it firsthand with a 30-day free trial. Take the first step toward revolutionizing your toxicology research with ToxGenie!